Breathing & Valsalva Cue

June 2025 - present

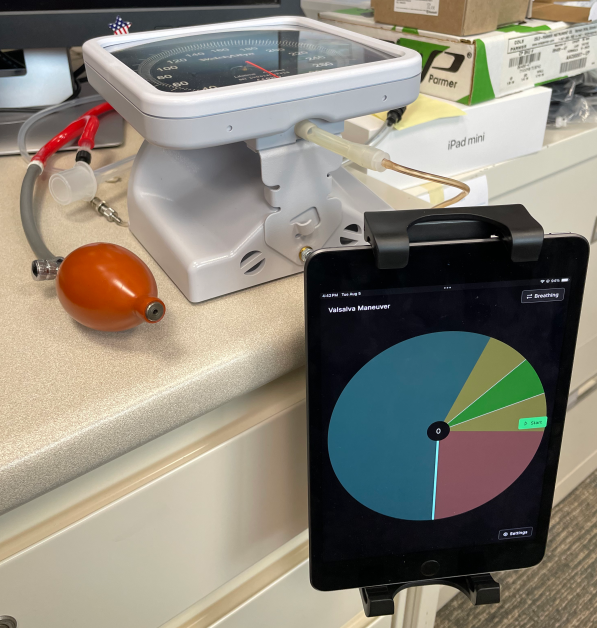

This project replaces outdated or non-specialized patient cueing equipment in the Mayo Clinic autonomic testing labs. An analog light box was previously used for deep breathing exercises, but the original supplier no longer manufactures it, and its display size and speed could not be customized. A sphygmomanometer (a pressure dial reading 0 to 320 mmHg) was previously used for the Valsalva maneuver, in which a patient blows into a tube and maintains pressure for 15 seconds. However, a patient is only expected to reach 40 mmHg, a target near the low end of the dial's range that the sphygmomanometer is not designed to highlight.

I designed and developed a web application in TypeScript with the Angular framework. It communicates with a Bluetooth pressure transducer through the Web Bluetooth API.
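As a rough illustration of the Web Bluetooth side, the sketch below subscribes to pressure notifications and turns each reading into a patient cue. It is not the application's actual code: the service and characteristic UUIDs, the payload format (one little-endian 32-bit float in mmHg), the 5 mmHg tolerance band, and the function names are all assumptions made for the example. Only the 40 mmHg target and the 15-second hold come from the description above.

```typescript
// Hypothetical UUIDs: the real transducer's GATT service and characteristic
// are not given here, so these are placeholders.
const PRESSURE_SERVICE = "0000fff0-0000-1000-8000-00805f9b34fb";
const PRESSURE_CHARACTERISTIC = "0000fff1-0000-1000-8000-00805f9b34fb";

const TARGET_MMHG = 40;  // expected Valsalva pressure
const HOLD_SECONDS = 15; // expected hold duration

type Cue = "blow harder" | "hold" | "ease off";

// Assumed payload: a single little-endian float32 holding the pressure in mmHg.
function parsePressure(view: DataView): number {
  return view.getFloat32(0, true);
}

// Map a reading to a cue. The tolerance band is an illustrative assumption.
function cueFor(mmHg: number, tolerance: number = 5): Cue {
  if (mmHg < TARGET_MMHG - tolerance) return "blow harder";
  if (mmHg > TARGET_MMHG + tolerance) return "ease off";
  return "hold";
}

// Fraction of the hold completed, clamped to the range 0..1.
function holdProgress(elapsedSeconds: number): number {
  return Math.min(1, Math.max(0, elapsedSeconds / HOLD_SECONDS));
}

// Connect to the transducer and forward each notification to a callback.
// Browsers only allow requestDevice() in response to a user gesture.
async function streamPressure(onReading: (mmHg: number) => void): Promise<void> {
  const bluetooth = (globalThis as any).navigator?.bluetooth;
  if (!bluetooth) {
    throw new Error("Web Bluetooth is not available in this environment");
  }
  const device = await bluetooth.requestDevice({
    filters: [{ services: [PRESSURE_SERVICE] }],
  });
  const server = await device.gatt.connect();
  const service = await server.getPrimaryService(PRESSURE_SERVICE);
  const characteristic = await service.getCharacteristic(PRESSURE_CHARACTERISTIC);
  characteristic.addEventListener("characteristicvaluechanged", (event: any) => {
    onReading(parsePressure(event.target.value as DataView));
  });
  await characteristic.startNotifications();
}
```

In an Angular component, `streamPressure` would typically be called from a button's click handler, with the callback updating whatever the patient sees, for example `streamPressure(p => this.cue = cueFor(p))`. Keeping `parsePressure`, `cueFor`, and `holdProgress` as pure functions lets them be unit-tested without a Bluetooth device.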

NutriBot

February 2025 - May 2025

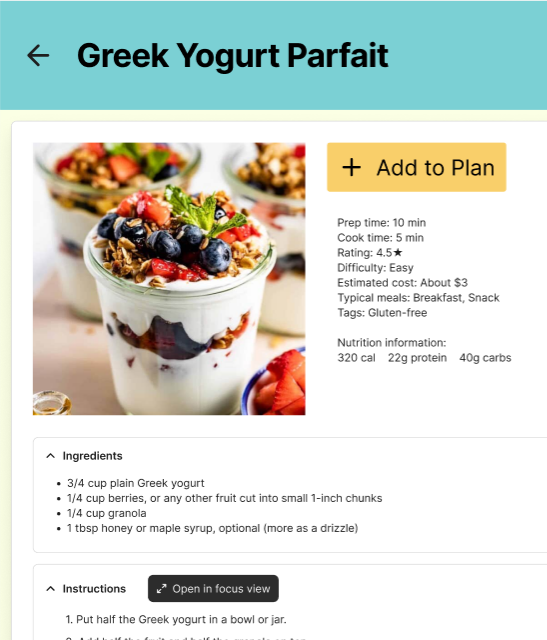

Many people have a limited food budget, specific nutritional goals, or little time to cook, but traditional meal planners are often too intrusive and time-consuming.

In the Human-Computer Interaction class at the University of St. Thomas, I worked in a team with Daniel Bauer, Eli Goldberger, and Matthew Thao to define this problem more thoroughly by gathering experiences from real target users. We then iteratively designed the user experience for a desktop website to address it, which we named "NutriBot".

Object Detection in Autonomous Vehicles

May 2023 - August 2024

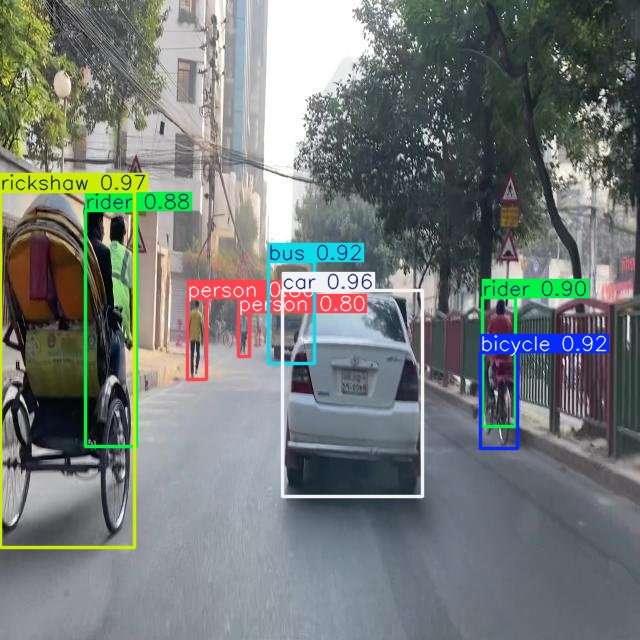

Autonomous vehicles have met a mixed public reception, in part because their object detection is less accurate for pedestrians and bicycles. Many models are also trained exclusively on datasets that represent Western countries.

I worked with Dr. Pakeeza Akram at the University of St. Thomas to review prior work in the field and train models from the "YOLO" family of object detectors on the Dhaka Occluded Objects dataset. We presented the results at the 2023 and 2024 Inquiry Poster Sessions at the University of St. Thomas. The research may be published in the future.